Table of Contents

- – 5 Easy Facts About Generative Ai For Software ...

- – Some Known Factual Statements About What Is A ...

- – 🔥 Machine Learning Engineer Course For 2023 ...

- – All about 🔥 Machine Learning Engineer Course...

- – About Best Online Software Engineering Cours...

- – Some Known Details About Leverage Machine Le...

My PhD was the most exhilarating and exhausting time of my life. Suddenly I was surrounded by people who could solve hard physics problems, understood quantum mechanics, and could design interesting experiments that got published in top journals. I felt like an impostor the whole time. I fell in with a great group that encouraged me to explore things at my own pace, and I spent the next 7 years learning a ton of things, the capstone of which was understanding/converting a molecular dynamics loss function (including those painfully learned analytic derivatives) from FORTRAN to C++, and writing a gradient descent routine straight out of Numerical Recipes.

I did a 3-year postdoc with little to no machine learning, just domain-specific biology stuff that I didn't find interesting, and eventually landed a job as a computer scientist at a national lab. It was a good pivot: I was a principal investigator, meaning I could apply for my own grants, write papers, etc., but didn't have to teach classes.

5 Easy Facts About Generative AI For Software Development Shown

I still didn't "get" machine learning and wanted to work somewhere that did ML. I tried to get a job as a SWE at Google, went through the wringer of all the hard questions, and ultimately got turned down at the last step (thanks, Larry Page). I went to work for a biotech for a year before I finally managed to get hired at Google during the "post-IPO, Google-classic" era, around 2007.

When I got to Google I quickly looked through all the projects doing ML and found that, other than ads, there really wasn't a lot. There was rephil, and SETI, and SmartASS, none of which seemed even remotely like the ML I was interested in (deep neural networks). So I went and focused on other things: learning the distributed technology underneath Borg and Colossus, and mastering the google3 stack and production environments, mainly from an SRE perspective.

All that time I'd spent on machine learning and computer infrastructure ... went to writing systems that loaded 80GB hash tables into memory just so a mapper could compute a small part of some gradient for some variable. Sibyl was actually a terrible system, and I got kicked off the team for telling the leader the right way to do DL was deep neural networks on high performance computing hardware, not mapreduce on cheap Linux cluster machines.

We had the data, the algorithms, and the compute, all at once. And even better, you didn't need to be inside Google to take advantage of it (except for the large data, which was changing quickly). I understand enough of the math, and the infra, to finally be an ML Engineer.

They are under intense pressure to get results a few percent better than their peers, and then, once published, pivot to the next-next thing. That's when I came up with one of my laws: "The best ML models are distilled from postdoc tears". I saw a few people break down and leave the industry for good just from working on super-stressful projects where they did great work, but only reached parity with a competitor.

Impostor syndrome drove me to overcome my impostor syndrome, and in doing so, along the way, I learned that what I was chasing was not actually what made me happy. I'm far more content puttering around using 5-year-old ML tech like object detectors to improve my microscope's ability to track tardigrades than I am trying to become a famous scientist who unlocked the hard problems of biology.

Some Known Factual Statements About What Is A Machine Learning Engineer (ML Engineer)?

Although I was interested in Machine Learning and AI in college, I never had the chance or patience to pursue that interest. Now that the ML field grew exponentially in 2023, with the latest innovations in large language models, I have a terrible longing for the road not taken.

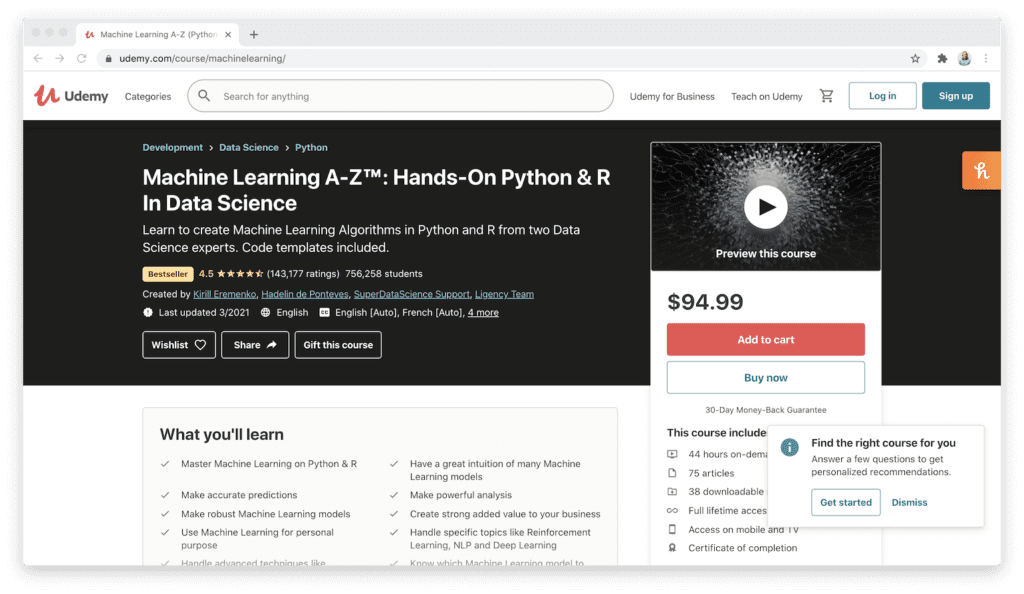

This crazy idea was also partly inspired by Scott Young's TED talk video. Scott explains how he completed a computer science degree just by following MIT curricula and self-studying, after which he was also able to land an entry-level position. I Googled around for self-taught ML Engineers.

Now, I am not sure whether it is possible to be a self-taught ML engineer. The only way to find out is to try it myself. However, I am optimistic. I plan on taking open courses available online, such as MIT OpenCourseWare and Coursera.

🔥 Machine Learning Engineer Course For 2023 - Learn ... Can Be Fun For Everyone

To be clear, my goal here is not to build the next groundbreaking model. I simply want to see if I can get an interview for a junior-level Machine Learning or Data Engineering job after this experiment. This is purely an experiment, and I am not trying to transition into a role in ML.

I plan on journaling about it weekly and documenting everything that I study. Another disclaimer: I am not starting from scratch. As I did my undergraduate degree in Computer Engineering, I understand some of the fundamentals needed to pull this off. I have strong background knowledge of single and multivariable calculus, linear algebra, and statistics, as I took these courses in school about a decade ago.

All about 🔥 Machine Learning Engineer Course For 2023 - Learn ...

I am going to focus mainly on Machine Learning, Deep Learning, and Transformer Architecture. The goal is to speed-run through these first three courses and get a solid understanding of the fundamentals.

Now that you've seen the course recommendations, here's a quick guide for your machine learning journey. First, we'll touch on the prerequisites for most machine learning courses. More advanced courses will require the following knowledge before starting:

- Linear Algebra
- Probability
- Calculus
- Programming

These are the general components of being able to understand how machine learning works under the hood.

The first course in this list, Machine Learning by Andrew Ng, contains refreshers on most of the math you'll need, but it might be challenging to learn machine learning and Linear Algebra at the same time if you haven't taken Linear Algebra before. If you need to brush up on the math required, do that before you start. I'd recommend learning Python, since the majority of good ML courses use Python.
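To make the prerequisites a little more concrete, here is a minimal sketch of where calculus and programming meet in a basic learning algorithm: fitting a straight line by gradient descent on mean squared error. The function name `fit_line`, the learning rate, the step count, and the toy data are all illustrative assumptions, not taken from any of the courses mentioned.

```python
def fit_line(xs, ys, lr=0.05, steps=2000):
    """Fit y = w*x + b by batch gradient descent on mean squared error.

    lr and steps are illustrative defaults chosen for the toy data below.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Residual of the current model on every training point.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = (1/n) * sum(error^2) w.r.t. w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (hypothetical example values).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(f"w={w:.3f}, b={b:.3f}")
```

The two gradient lines are the calculus, the sums over data points are the linear algebra written out by hand, and the loop is the programming. Real courses typically use NumPy or a framework for the same steps.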

About Best Online Software Engineering Courses And Programs

Additionally, another excellent Python resource offers many free Python lessons in an interactive browser environment. After learning the prerequisite essentials, you can start to really understand how the algorithms work. There's a base set of algorithms in machine learning that everyone should be familiar with and have experience using.

The courses listed above contain essentially all of these with some variation. Understanding how these techniques work and when to use them will be crucial when taking on new projects. After the basics, some more advanced techniques to learn would be:

- Ensembles
- Boosting
- Neural Networks and Deep Learning

This is just a start, but these algorithms are what you see in some of the most interesting machine learning solutions, and they're practical additions to your toolbox.
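As a rough illustration of the ensemble idea, the sketch below trains several very weak classifiers (one-threshold "decision stumps") on bootstrap resamples of a toy dataset and combines them by majority vote. This is bagging, one simple kind of ensemble; it is not boosting. All names (`train_stump`, `train_bagged_stumps`, `predict`) and the data are hypothetical and chosen only for this example.

```python
import random
from collections import Counter


def train_stump(points):
    """Pick the threshold on a 1-D feature that misclassifies the fewest points.

    A stump predicts 1 when x > threshold, else 0.
    """
    best_threshold, best_errors = None, None
    for threshold, _ in points:
        errors = sum((x > threshold) != bool(label) for x, label in points)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def train_bagged_stumps(points, n_models=25, seed=0):
    """Train each stump on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    stumps = []
    for _ in range(n_models):
        sample = [rng.choice(points) for _ in points]
        stumps.append(train_stump(sample))
    return stumps


def predict(stumps, x):
    """Majority vote over all stumps."""
    votes = Counter(int(x > threshold) for threshold in stumps)
    return votes.most_common(1)[0][0]


# Toy data: (feature, label) pairs, class 1 for larger feature values.
data = [(0.5, 0), (1.0, 0), (1.5, 0), (2.0, 0),
        (3.0, 1), (3.5, 1), (4.0, 1), (4.5, 1)]
stumps = train_bagged_stumps(data)
print(predict(stumps, 0.0), predict(stumps, 5.0))
```

Each stump alone depends heavily on which points landed in its resample; the vote averages that variation out, which is the basic reason ensembles tend to be more stable than their individual members.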

Learning machine learning online is challenging and extremely rewarding. It's important to remember that just watching videos and taking quizzes doesn't mean you're really learning the material. You'll learn even more if you have a side project you're working on that uses different data and has different objectives than the course itself.

Google Scholar is always a good place to start. Enter keywords like "machine learning" and "Twitter", or whatever else you're interested in, and hit the little "Create Alert" link on the left to get emails. Make it a weekly habit to read those alerts, scan through papers to see if they're worth reading, and then commit to understanding what's going on.

Some Known Details About Leverage Machine Learning For Software Development - Gap

Machine learning is incredibly enjoyable and exciting to learn and experiment with, and I hope you found a course above that fits your own journey into this exciting field. Machine learning makes up one component of Data Science. If you're also interested in learning about statistics, visualization, data analysis, and more, be sure to check out the top data science courses, which is a guide that follows a similar format to this one.
